Azure WebJob intermittently fails to connect to the storage account

We have an Azure WebJob whose functions run with the [Singleton] attribute, and it intermittently complains that it cannot connect to the storage account. This happens either when it needs the account to acquire the lock or when we try to write logs to the storage account.

In the WebJob logs this shows up as "Invalid storage account 'XXXXXX'. Please make sure your credentials are correct."

I have double-checked the access keys for the storage account against the values in the App Service connection strings for AzureWebJobsStorage and AzureWebJobsDashboard, and against our own app setting, which we use when creating a CloudTableClient for logging.

The failure is intermittent: the job works about 80% of the time and fails the other 20%.

Sample from the logs:

[12/02/2017 16:45:10 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage)

[12/02/2017 16:45:10 > d747a0: INFO] 12/2/2017 4:45:10 PM - Rfid processing started for Message Id 3286783.

[12/02/2017 16:45:13 > d747a0: INFO] Microsoft.ServiceBus.Messaging.BrokeredMessage{MessageId:3286783}

[12/02/2017 16:45:13 > d747a0: INFO] 12/2/2017 4:45:13 PM - Rfid processing finished for Message Id 3286783.

[12/02/2017 16:45:13 > d747a0: INFO] Singleton lock released (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage)

[12/02/2017 16:45:13 > d747a0: INFO] Executed 'GPOFunctions.ProcessQueueMessage' (Succeeded, Id=aa430942-4fcb-4fa6-a899-fe936a183494)

[12/02/2017 16:45:13 > d747a0: INFO] Executing 'GPOFunctions.ProcessQueueMessage' (Reason='New ServiceBus message detected on 'tprfid/Subscriptions/subRfidUat'.', Id=c6eb4e56-ebd0-4410-acde-4e3bd7c3666d)

[12/02/2017 16:45:13 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage)

[12/02/2017 16:45:13 > d747a0: INFO] 12/2/2017 4:45:13 PM - Rfid processing started for Message Id 3286784.

[12/02/2017 16:45:16 > d747a0: INFO] Microsoft.ServiceBus.Messaging.BrokeredMessage{MessageId:3286784}

[12/02/2017 16:45:16 > d747a0: WARN] Reached maximum allowed output lines for this run, to see all of the job's logs you can enable website application diagnostics

[12/02/2017 16:55:03 > d747a0: SYS ERR ] Job failed due to exit code -532462766

[12/02/2017 16:55:03 > d747a0: SYS INFO] Process went down, waiting for 0 seconds

[12/02/2017 16:55:03 > d747a0: SYS INFO] Status changed to PendingRestart

[12/02/2017 16:55:03 > d747a0: SYS INFO] Run script 'CHO.WebJobs.csRfid.exe' with script host - 'WindowsScriptHost'

[12/02/2017 16:55:03 > d747a0: SYS INFO] Status changed to Running

[12/02/2017 16:55:11 > d747a0: INFO] Found the following functions:

[12/02/2017 16:55:11 > d747a0: INFO] CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage

[12/02/2017 16:55:11 > d747a0: INFO] CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer

[12/02/2017 16:55:12 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer.Listener)

[12/02/2017 16:55:12 > d747a0: INFO] Executing 'GPOFunctions.ProcessQueueMessage' (Reason='New ServiceBus message detected on 'tprfid/Subscriptions/subRfidUat'.', Id=ac2385b9-2000-4533-9166-57df9fef904f)

[12/02/2017 16:55:12 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage)

[12/02/2017 16:55:13 > d747a0: INFO] 12/2/2017 4:55:13 PM - Rfid processing started for Message Id 3286815.

[12/02/2017 16:55:42 > d747a0: ERR ]

[12/02/2017 16:55:42 > d747a0: ERR ] Unhandled Exception: Microsoft.WindowsAzure.Storage.StorageException: Unable to connect to the remote server ---> System.Net.WebException: Unable to connect to the remote server ---> System.Net.Sockets.SocketException: An attempt was made to access a socket in a way forbidden by its access permissions

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.Sockets.Socket.DoBind(EndPoint endPointSnapshot, SocketAddress socketAddress)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.Sockets.Socket.InternalBind(EndPoint localEP)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.Sockets.Socket.BeginConnectEx(EndPoint remoteEP, Boolean flowContext, AsyncCallback callback, Object state)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.Sockets.Socket.UnsafeBeginConnect(EndPoint remoteEP, AsyncCallback callback, Object state)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.ServicePoint.ConnectSocketInternal(Boolean connectFailure, Socket s4, Socket s6, Socket& socket, IPAddress& address, ConnectSocketState state, IAsyncResult asyncResult, Exception& exception)

[12/02/2017 16:55:42 > d747a0: ERR ] --- End of inner exception stack trace ---

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Net.HttpWebRequest.EndGetResponse(IAsyncResult asyncResult)

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.EndGetResponse[T](IAsyncResult getResponseResult) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Executor\Executor.cs:line 284

[12/02/2017 16:55:42 > d747a0: ERR ] --- End of inner exception stack trace ---

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.EndExecuteAsync[T](IAsyncResult result) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Executor\Executor.cs:line 50

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Queue.CloudQueue.EndExists(IAsyncResult asyncResult) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Queue\CloudQueue.cs:line 994

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Util.AsyncExtensions.<>c__DisplayClass1`1.<CreateCallback>b__0(IAsyncResult ar) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Util\AsyncExtensions.cs:line 66

[12/02/2017 16:55:42 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Queues.Listeners.QueueListener.<ExecuteAsync>d__21.MoveNext()

[12/02/2017 16:55:42 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Timers.TaskSeriesTimer.<RunAsync>d__14.MoveNext()

[12/02/2017 16:55:42 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 16:55:42 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Timers.WebJobsExceptionHandler.<>c__DisplayClass3_0.<OnUnhandledExceptionAsync>b__0()

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Threading.ThreadHelper.ThreadStart_Context(Object state)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

[12/02/2017 16:55:42 > d747a0: ERR ] at System.Threading.ThreadHelper.ThreadStart()

[12/02/2017 16:55:42 > d747a0: SYS ERR ] Job failed due to exit code -532462766

[12/02/2017 16:55:42 > d747a0: SYS INFO] Process went down, waiting for 60 seconds

[12/02/2017 16:55:42 > d747a0: SYS INFO] Status changed to PendingRestart

[12/02/2017 17:03:44 > d747a0: SYS INFO] Run script 'CHO.WebJobs.csRfid.exe' with script host - 'WindowsScriptHost'

[12/02/2017 17:03:44 > d747a0: SYS INFO] Status changed to Running

[12/02/2017 17:04:13 > d747a0: ERR ]

[12/02/2017 17:04:13 > d747a0: ERR ] Unhandled Exception: System.InvalidOperationException: Invalid storage account 'sachouat'. Please make sure your credentials are correct.

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Executors.DefaultStorageCredentialsValidator.<ValidateCredentialsAsyncCore>d__2.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Executors.DefaultStorageCredentialsValidator.<ValidateCredentialsAsync>d__1.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Executors.DefaultStorageAccountProvider.<TryGetAccountAsync>d__23.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ValidateEnd(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Executors.JobHostContextFactory.<CreateAndLogHostStartedAsync>d__5.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Executors.JobHostContextFactory.<CreateAndLogHostStartedAsync>d__4.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.JobHost.<CreateContextAndLogHostStartedAsync>d__44.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.JobHost.<StartAsyncCore>d__27.MoveNext()

[12/02/2017 17:04:13 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.JobHost.Start()

[12/02/2017 17:04:13 > d747a0: ERR ] at Microsoft.Azure.WebJobs.JobHost.RunAndBlock()

[12/02/2017 17:04:13 > d747a0: ERR ] at CHO.WebJobs.csRfid.ProcessRfidXml.StartListening() in F:\agent\_work\4\s\CHO.WebJobs\CHO.WebJobs.csRfid\ProcessRfidXml.cs:line 68

[12/02/2017 17:04:13 > d747a0: ERR ] at CHO.WebJobs.csRfid.Program.Main() in F:\agent\_work\4\s\CHO.WebJobs\CHO.WebJobs.csRfid\Program.cs:line 98

[12/02/2017 17:04:13 > d747a0: SYS ERR ] Job failed due to exit code -532462766

[12/02/2017 17:04:13 > d747a0: SYS INFO] Process went down, waiting for 60 seconds

[12/02/2017 17:04:13 > d747a0: SYS INFO] Status changed to PendingRestart

[12/02/2017 17:05:14 > d747a0: SYS INFO] Run script 'CHO.WebJobs.csRfid.exe' with script host - 'WindowsScriptHost'

[12/02/2017 17:05:14 > d747a0: SYS INFO] Status changed to Running

[12/02/2017 17:05:28 > d747a0: INFO] Found the following functions:

[12/02/2017 17:05:28 > d747a0: INFO] CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage

[12/02/2017 17:05:28 > d747a0: INFO] CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer

[12/02/2017 17:05:29 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer.Listener)

[12/02/2017 17:05:29 > d747a0: INFO] Function 'CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer' initial status: Last='2017-12-02T16:54:06.7331501+00:00', Next='2017-12-02T16:55:06.7331501+00:00'

[12/02/2017 17:05:29 > d747a0: INFO] Function 'CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer' is past due on startup. Executing now.

[12/02/2017 17:05:32 > d747a0: INFO] Executing 'GPOFunctions.ProcessQueueMessage' (Reason='New ServiceBus message detected on 'tprfid/Subscriptions/subRfidUat'.', Id=15bc1f92-4def-4ba2-ba96-5c2f510ee933)

[12/02/2017 17:05:32 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessQueueMessage)

[12/02/2017 17:05:33 > d747a0: INFO] Executing 'GPOFunctions.ProcessTimer' (Reason='Timer fired at 2017-12-02T17:05:29.9786228+00:00', Id=3d6dc09b-82fb-41ae-a8d6-9d140af14945)

[12/02/2017 17:05:33 > d747a0: INFO] Singleton lock acquired (5d3cc9c4e92841579c4df47db66e5bfc/CHO.WebJobs.csRfid.GPOFunctions.ProcessTimer)

[12/02/2017 17:05:33 > d747a0: INFO] 12/2/2017 5:05:33 PM - Rfid processing started for Message Id 3286814.

[12/02/2017 17:05:55 > d747a0: ERR ]

[12/02/2017 17:05:55 > d747a0: ERR ] Unhandled Exception: Microsoft.WindowsAzure.Storage.StorageException: Unable to connect to the remote server ---> System.Net.WebException: Unable to connect to the remote server ---> System.Net.Sockets.SocketException: An attempt was made to access a socket in a way forbidden by its access permissions

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.Sockets.Socket.DoBind(EndPoint endPointSnapshot, SocketAddress socketAddress)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.Sockets.Socket.InternalBind(EndPoint localEP)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.Sockets.Socket.BeginConnectEx(EndPoint remoteEP, Boolean flowContext, AsyncCallback callback, Object state)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.Sockets.Socket.UnsafeBeginConnect(EndPoint remoteEP, AsyncCallback callback, Object state)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.ServicePoint.ConnectSocketInternal(Boolean connectFailure, Socket s4, Socket s6, Socket& socket, IPAddress& address, ConnectSocketState state, IAsyncResult asyncResult, Exception& exception)

[12/02/2017 17:05:55 > d747a0: ERR ] --- End of inner exception stack trace ---

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Net.HttpWebRequest.EndGetResponse(IAsyncResult asyncResult)

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.EndGetResponse[T](IAsyncResult getResponseResult) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Executor\Executor.cs:line 284

[12/02/2017 17:05:55 > d747a0: ERR ] --- End of inner exception stack trace ---

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.EndExecuteAsync[T](IAsyncResult result) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Executor\Executor.cs:line 50

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Queue.CloudQueue.EndExists(IAsyncResult asyncResult) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Queue\CloudQueue.cs:line 994

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.WindowsAzure.Storage.Core.Util.AsyncExtensions.<>c__DisplayClass1`1.<CreateCallback>b__0(IAsyncResult ar) in c:\Program Files (x86)\Jenkins\workspace\release_dotnet_master\Lib\ClassLibraryCommon\Core\Util\AsyncExtensions.cs:line 66

[12/02/2017 17:05:55 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Queues.Listeners.QueueListener.<ExecuteAsync>d__21.MoveNext()

[12/02/2017 17:05:55 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.ThrowForNonSuccess(Task task)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Runtime.CompilerServices.TaskAwaiter.HandleNonSuccessAndDebuggerNotification(Task task)

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Timers.TaskSeriesTimer.<RunAsync>d__14.MoveNext()

[12/02/2017 17:05:55 > d747a0: ERR ] --- End of stack trace from previous location where exception was thrown ---

[12/02/2017 17:05:55 > d747a0: ERR ] at Microsoft.Azure.WebJobs.Host.Timers.WebJobsExceptionHandler.<>c__DisplayClass3_0.<OnUnhandledExceptionAsync>b__0()

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Threading.ThreadHelper.ThreadStart_Context(Object state)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Threading.ExecutionContext.RunInternal(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state, Boolean preserveSyncCtx)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

[12/02/2017 17:05:55 > d747a0: ERR ] at System.Threading.ThreadHelper.ThreadStart()

[12/02/2017 17:05:55 > d747a0: SYS ERR ] Job failed due to exit code -532462766

[12/02/2017 17:05:55 > d747a0: SYS INFO] Process went down, waiting for 60 seconds

[12/02/2017 17:05:55 > d747a0: SYS INFO] Status changed to PendingRestart

3 Answers

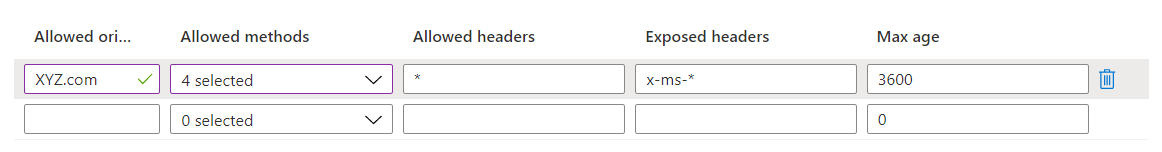

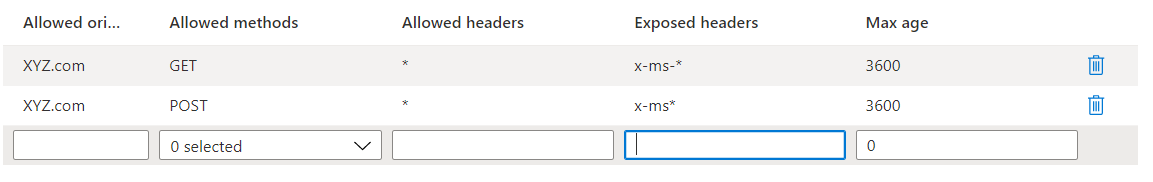

Have you checked whether CORS is enabled on your storage account? If CORS is configured, the account will be accessible only from the whitelisted domains.

I see that you are getting an exception while trying to open a socket to talk to Azure Storage. My guess is that the intermittent failure occurs because you are exceeding the outbound connection limit of the Azure Web App. To fix this, try scaling up to a larger plan.
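To tell this socket-level failure apart from a genuine credentials problem, the exception chain can be inspected for the underlying SocketException. The following is a minimal sketch, not part of the original job: the class and method names are hypothetical, and it assumes the message in the log ("An attempt was made to access a socket in a way forbidden by its access permissions") corresponds to `SocketError.AccessDenied` (Winsock error 10013).

```csharp
using System;
using System.Net.Sockets;

// Hypothetical helper: names are illustrative, not from the WebJobs SDK.
public static class SocketFailure
{
    // Walks the InnerException chain and returns the first SocketException,
    // or null when the chain contains none.
    public static SocketException Find(Exception ex)
    {
        for (Exception e = ex; e != null; e = e.InnerException)
        {
            SocketException se = e as SocketException;
            if (se != null)
            {
                return se;
            }
        }
        return null;
    }

    // True when the chain contains a SocketException with the AccessDenied
    // code (10013), the error text that appears in the log above.
    public static bool IsSocketAccessDenied(Exception ex)
    {
        SocketException se = Find(ex);
        return se != null && se.SocketErrorCode == SocketError.AccessDenied;
    }
}
```

In the job's own logging code, a check like this could be applied in a catch block around the table call, so that a socket failure is logged or retried as a connectivity problem rather than treated as bad credentials. It cannot intercept exceptions thrown on the SDK's own listener threads, such as the one in the log.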

We recently ran into a similar problem with one of our WebJobs, which had been working fine and then suddenly stopped. The WebJob tried to restart every minute but failed each time with the error "Invalid storage account 'ABStorage'. Please make sure your credentials are correct." and remained in the PendingRestart state.

After investigating, we found that the storage connection string was fine. Instead, a bug in the Azure Storage SDK caused the CORS rules configured in the Azure portal for the storage account to be interpreted incorrectly. In the portal we had a single CORS rule on the storage account with all allowed HTTP methods selected. We changed the CORS rules on the storage account so that there is one HTTP method per domain, and the WebJob was able to start immediately.
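For illustration, the shape of rules described above might look like this in the `Cors` element of the storage service properties. This is a sketch under assumptions: the origins, header values, and max age are hypothetical placeholders, and "one HTTP method per domain" is read here as one rule per origin with a single allowed method.

```xml
<Cors>
  <!-- One rule per origin, each allowing a single HTTP method -->
  <CorsRule>
    <AllowedOrigins>https://app.example.com</AllowedOrigins>
    <AllowedMethods>GET</AllowedMethods>
    <MaxAgeInSeconds>200</MaxAgeInSeconds>
    <ExposedHeaders>*</ExposedHeaders>
    <AllowedHeaders>*</AllowedHeaders>
  </CorsRule>
  <CorsRule>
    <AllowedOrigins>https://admin.example.com</AllowedOrigins>
    <AllowedMethods>PUT</AllowedMethods>
    <MaxAgeInSeconds>200</MaxAgeInSeconds>
    <ExposedHeaders>*</ExposedHeaders>
    <AllowedHeaders>*</AllowedHeaders>
  </CorsRule>
</Cors>
```

The same rules can be entered through the CORS blade of the storage account in the portal, which is how the answer above describes making the change.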