Why do I get "done" right after loading checkpoints to test the model?

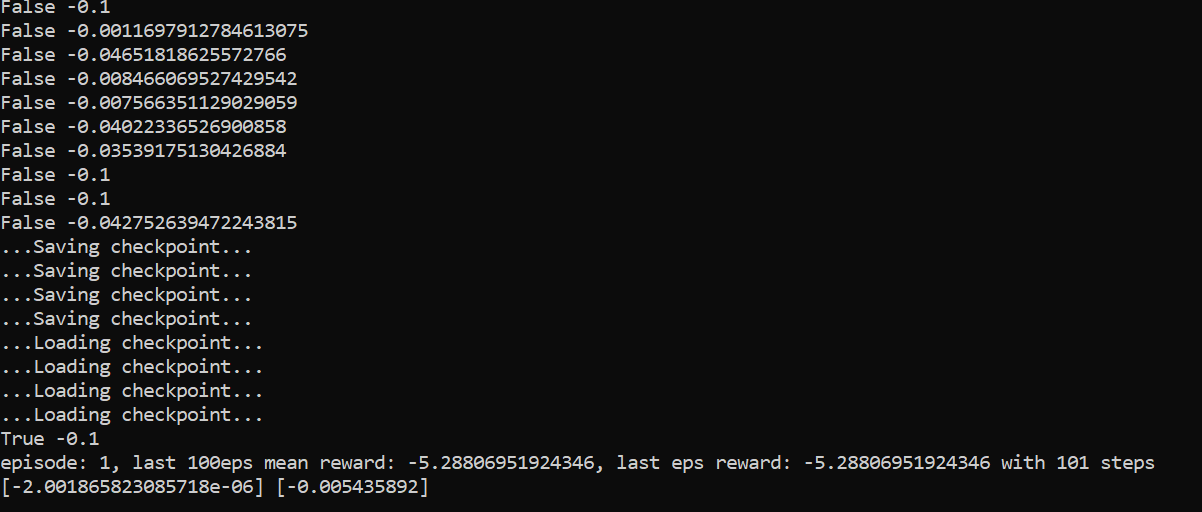

The screenshot shows the output of a run, where each step prints [done, reward].

I ran into the problem shown above while trying to implement the TD3 paper. After I save the model and load it for testing, further training is interrupted: the very next training step comes back with "done" set, and the episode ends there. Can anyone help me with this?

    for episode in range(1, self.hyperparamDict['maxEpisode'] + 1):
        obs = env.reset()
        epsReward = 0
        episodeestimate = 0
        for i in range(self.hyperparamDict['maxTimeStep']):
            isdone = False
            # env.render()
            act = agent.choose_action(obs, env.action_space.low, env.action_space.high)
            new_state, reward, isdone, info = env.step(act)
            agent.remember(obs, act, reward, new_state, int(isdone))
            print(isdone, reward)
            agent.learn()
            epsReward += reward
            obs = new_state
            agent.runtime += 1
            # every 100 steps: save a checkpoint and evaluate it
            if agent.runtime % 100 == 0:
                agent.save_models()
                # it interrupts training
                actualQ, predictedQ = getddpgModelEvalResult(env, self.hyperparamDict, numTrajToSample=10)
                actualreward.append(actualQ)
                predictreward.append(predictedQ)
            if isdone:
                break
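One thing visible in the code is that `getddpgModelEvalResult` is handed the very same `env` object that the training loop is stepping, and it calls `env.reset()` and `env.step()` on it. The sketch below illustrates how that alone could produce an immediate "done" on the next training step. It is only a toy model, not the actual environment: `ToyEnv`, `evaluate` and `first_done_after_eval` are hypothetical names, and the assumption is that the environment keeps reporting done once its step limit has been reached, as many Gym-style environments with a time limit do.

```python
class ToyEnv:
    """Hypothetical stand-in for a Gym-style environment with a step limit."""

    def __init__(self, max_steps=5):
        self.max_steps = max_steps
        self.t = 0

    def reset(self):
        self.t = 0
        return 0.0  # dummy observation

    def step(self, action):
        self.t += 1
        done = self.t >= self.max_steps  # stays True once the limit is passed
        return float(self.t), 1.0, done, {}


def evaluate(env, num_traj=2):
    """Roll out full trajectories; this leaves `env` in a terminal state."""
    for _ in range(num_traj):
        env.reset()
        done = False
        while not done:
            _, _, done, _ = env.step(0)


def first_done_after_eval(share_env):
    """Return the done flag of the first training step taken after evaluation."""
    train_env = ToyEnv()
    eval_env = train_env if share_env else ToyEnv()
    train_env.reset()
    train_env.step(0)                  # one ordinary training step
    evaluate(eval_env)                 # evaluation runs in the middle of the episode
    _, _, done, _ = train_env.step(0)  # the next training step
    return done


print(first_done_after_eval(share_env=True))   # True
print(first_done_after_eval(share_env=False))  # False
```

If this is what is happening here, building a separate environment instance for evaluation (for example, a second `gym.make(...)` call with the same ID) and passing that to the evaluation helper would leave the training episode untouched.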

    import numpy as np
    import tensorflow as tf  # TF 1.x API (tf.reset_default_graph)

    # Agent and env_norm come from the rest of my project.

    def getddpgModelEvalResult(env, hyperparamDict, numTrajToSample=10):
        tf.reset_default_graph()
        totalrewards = []
        # build a fresh agent with the same hyperparameters and load the checkpoint
        agent_test = Agent(
            alpha=hyperparamDict['actorLR'],
            beta=hyperparamDict['criticLR'],
            input_dims=[env.observation_space.shape[0]],
            tau=hyperparamDict['tau'],
            env=env_norm(env) if hyperparamDict['normalizeEnv'] else env,
            n_actions=env.action_space.shape[0],
            units1=hyperparamDict['actorHiddenLayersWidths'],
            units2=hyperparamDict['criticHiddenLayersWidths'],
            actoractivationfunction=hyperparamDict['actorActivFunction'],
            actorHiddenLayersWeightInit=hyperparamDict['actorHiddenLayersWeightInit'],
            actorHiddenLayersBiasInit=hyperparamDict['actorHiddenLayersBiasInit'],
            actorOutputWeightInit=hyperparamDict['actorOutputWeightInit'],
            actorOutputBiasInit=hyperparamDict['actorOutputBiasInit'],
            criticHiddenLayersWidths=hyperparamDict['criticHiddenLayersWidths'],
            criticActivFunction=hyperparamDict['criticActivFunction'],
            criticHiddenLayersBiasInit=hyperparamDict['criticHiddenLayersBiasInit'],
            criticHiddenLayersWeightInit=hyperparamDict['criticHiddenLayersWeightInit'],
            criticOutputWeightInit=hyperparamDict['criticOutputWeightInit'],
            criticOutputBiasInit=hyperparamDict['criticOutputBiasInit'],
            max_size=hyperparamDict['bufferSize'],
            gamma=hyperparamDict['gamma'],
            batch_size=hyperparamDict['minibatchSize'],
            initnoisevar=hyperparamDict['noiseInitVariance'],
            noiseDecay=hyperparamDict['varianceDiscount'],
            noiseDacayStep=hyperparamDict['noiseDecayStartStep'],
            minVar=hyperparamDict['minVar'],
            path=hyperparamDict['modelSavePathPhil']
        )
        agent_test.load_models()
        collection_rewards = []
        collection_estimation = []
        for i in range(numTrajToSample):
            obs = env.reset()
            rewards = 0
            estimated_rewards = 0
            # per-trajectory buffers: created once per trajectory, not once per step,
            # so that they hold every step of the rollout
            trajectoryreward = []
            trajectoryestimate = []
            for j in range(hyperparamDict['maxTimeStep']):
                done = False
                act = agent_test.choose_test_action(obs, env.action_space.low, env.action_space.high)
                new_state, reward, done, info = env.step(act)
                obs_array = np.reshape(obs, [1, env.observation_space.shape[0]])
                act_array = np.reshape(act, [1, env.action_space.shape[0]])
                # critic's estimate for the (state, action) pair just taken
                estimated_rewards = agent_test.critic.predict(obs_array, act_array)
                trajectoryreward.append(reward)
                trajectoryestimate.append(estimated_rewards)
                obs = new_state
                if done:
                    break
            collection_rewards.append(trajectoryreward)
            collection_estimation.append(np.mean(trajectoryestimate))
        # calculate the actual Q from reward
        actualQ_mean = []
        for item in range(len(collection_rewards)):
            actualQ = []
            temp = collection_rewards[item]
            length = len(temp)
            for i in range(length):
                # discounted return of the remaining rewards, then drop the first one
                gamma_list = [hyperparamDict['gamma']**j for j in range(length)]
                reward_list = temp
                Q = sum([a*b for a, b in zip(gamma_list, reward_list)])
                actualQ.append(Q)
                temp.pop(0)
                length = len(temp)
            actualQ_mean.append(np.mean(actualQ))
        del agent_test
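For reference, the "actual Q" loop above computes, for every step of a trajectory, the discounted sum of the rewards from that step to the end. The same values can be obtained in a single backward pass using the recursion G_t = r_t + gamma * G_{t+1}, without popping items from the reward list. This is only a sketch: `discounted_returns` is a hypothetical helper name, not something from the code above.

```python
def discounted_returns(rewards, gamma):
    """Return [G_0, G_1, ...] where G_t = sum_k gamma**k * rewards[t + k]."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # walk backwards so each step reuses the return of the step after it
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


print(discounted_returns([1.0, 1.0, 1.0], 0.5))  # [1.75, 1.5, 1.0]
```

Averaging the returned list gives the same per-trajectory mean that `actualQ_mean` collects, and the input reward list is left unmodified.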