AVFoundation: how to crop and scale video?

The application is a kind of photo and video editor, built with ASDK. There is a main cell that contains other cells (nodes) of various shapes; there can be from 1 to 15 of them. The user can add a video to each cell and can scale the video or move it around within the cell. The task is to compose these videos into a single video and fill the gaps between the cells with the background of the main cell (for example, white). The problem is that only some videos come out properly in the export, with the right shape (or the default shape that fills the whole cell the video is in), while other videos do not appear in the exported result at all. The same video can be displayed when it is placed in one cell and be missing when it is placed in another cell of the same composition. Please tell me how this can be fixed.

    func videoUrlFromCell(cell: StoryPageNode, cellSize: CGSize, completion: @escaping ((_ url: URL) -> Void)) {
        // Calculating size of the exported video in points. Should be a multiple of 16, otherwise the video exporter will return an error
        let renderWidth = cellSize.width - cellSize.width.truncatingRemainder(dividingBy: 16)
        let renderHeight = cellSize.height - cellSize.height.truncatingRemainder(dividingBy: 16)

        // Calculating size of the exported video in pixels
        let screenFactor = UIScreen.main.scale
        let renderSize = CGSize(width: screenFactor * renderWidth, height: screenFactor * renderHeight)

        // Calculating a scale correction for views. As the exported video can have a different size than the cell, we need to correct all positions and sizes of media views, so videos will have the correct position/size
        let scaleY = screenFactor * renderHeight / cellSize.height
        let scaleX = screenFactor * renderWidth / cellSize.width
        let scalePoint = CGPoint(x: scaleX, y: scaleY)

        var mainCompositionInst: AVMutableVideoComposition!
        var mixComposition: AVMutableComposition!

        // Generating a background url which will be used below each video. Background itself is a 100x100 black or white video file (probably should be 1x1) that will be reused for each video. It will be scaled and positioned according to each media node. (hack)
        VideoBackgroundGenerator.backgroundVideoFilePath(storyPage: cell.page).then(on: .main) { (backgroundURL) -> Promise<UIImage> in
            // Main function where all the composition happens
            let tuple = self.getVideoComposition(cell, backgroundURL: backgroundURL, renderSize: renderSize, scalePoint: scalePoint)
            mainCompositionInst = tuple.mainCompositionInst
            mixComposition = tuple.mixComposition

            // Generating an overlay image with all the static content and transparent areas in place of the media nodes with videos. This image will be placed on top of the final video composition with all the videos.
            return self.overlayFromCell(cell: cell, cellSize: cellSize, overlaySize: renderSize)
        }.then(on: .main) { (image) -> Promise<URL> in
            // We add overlay image on top of the composition and export video
            self.addOverlay(to: mainCompositionInst, size: renderSize, overlayImage: image)
            return self.exportVideoSession(mixComposition, videoComposition: mainCompositionInst)
        }.then({ (url) in
            completion(url)
        }).catch { (error) in
            debugPrint(error.localizedDescription)
        }
    }
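
For reference, the render-size arithmetic at the top of `videoUrlFromCell` can be checked in isolation. This is only a sketch: `roundedDown` and `renderMetrics` are hypothetical helper names, and the 375 x 667 point cell with a screen scale of 2 is an assumed example, not a value from the app.

```swift
import Foundation

/// Rounds a length down to the nearest multiple of `step` (16 in the code above).
func roundedDown(_ value: CGFloat, toMultipleOf step: CGFloat) -> CGFloat {
    return value - value.truncatingRemainder(dividingBy: step)
}

/// Hypothetical helper: render size in pixels plus the per-axis correction scale.
func renderMetrics(cellSize: CGSize, screenScale: CGFloat) -> (renderSize: CGSize, scale: CGPoint) {
    let width = roundedDown(cellSize.width, toMultipleOf: 16)
    let height = roundedDown(cellSize.height, toMultipleOf: 16)
    let renderSize = CGSize(width: screenScale * width, height: screenScale * height)
    // The correction scale maps cell points to render pixels on each axis.
    let scale = CGPoint(x: renderSize.width / cellSize.width,
                        y: renderSize.height / cellSize.height)
    return (renderSize, scale)
}

// Assumed example: a 375 x 667 point cell on a 2x screen.
let metrics = renderMetrics(cellSize: CGSize(width: 375, height: 667), screenScale: 2)
print(metrics.renderSize) // 736 x 1312 pixels
print(metrics.scale)      // slightly below 2 on both axes
```

Because the rounded size is smaller than the cell, the two scale factors are slightly different from each other, which is why the composition code carries `scaleX` and `scaleY` separately.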

    private func getVideoComposition(_ cell: StoryPageNode, backgroundURL: URL, renderSize: CGSize, scalePoint: CGPoint) -> (mainCompositionInst: AVMutableVideoComposition, mixComposition: AVMutableComposition) {
        let pageData = cell.page
        var maxVideoDuration = 1.0
        let scaleX = scalePoint.x
        let scaleY = scalePoint.y
        let mixComposition = AVMutableComposition()
        var layerInstructions = [AVMutableVideoCompositionLayerInstruction]()

        // Looping through media models on the page
        for (id, media) in pageData.mediaList {
            guard let videoModel = media as? StoryPageMediaVideo else {
                debugPrint("error", #function, #line)
                continue
            }
            if let videoAssetUrl = videoModel.mediaUrl {
                let videoAsset = AVAsset(url: videoAssetUrl)
                let videoTrack = videoAsset.tracks(withMediaType: AVMediaType.video).first

                // Getting the size of the video in pixels and its orientation
                var videoTrackSize = videoTrack?.resolution() ?? CGSize.zero
                guard let mediaNode = cell.mediaViewFor(mediaId: id) else {
                    debugPrint("media node error", #function, #line)
                    continue
                }
                let videoNodeFrame = mediaNode.frame

                // Get scaled size of the video node according to the scale calculated earlier
                let scaledSize = CGSize(width: videoNodeFrame.width * scaleX, height: videoNodeFrame.height * scaleY)

                // Calculating a scale which will give us aspect fill size for the video inside the scaled size of the media node
                var aspectFillScale = self.aspectFillScale(for: videoTrackSize, in: scaledSize)

                // If user scaled video somehow, we add this to our scale
                if let customScale = videoModel.specificScale {
                    aspectFillScale *= customScale
                }

                // Now that we know the scale for the aspect fill, calculate the video track size (to fill the media node)
                videoTrackSize = CGSize(width: aspectFillScale * videoTrackSize.width, height: aspectFillScale * videoTrackSize.height)

                // Calculating distance to center of the media node
                let distanceToCenter = CGPoint(x: videoTrackSize.width / 2 - scaledSize.width / 2, y: videoTrackSize.height / 2 - scaledSize.height / 2)

                // Transform of the video track in the main composition
                var transform = CGAffineTransform.identity

                // Moving transform to the center of the media node
                transform = transform.translatedBy(x: videoNodeFrame.origin.x * scaleX - distanceToCenter.x, y: videoNodeFrame.origin.y * scaleY - distanceToCenter.y)

                // If user moved video somehow, calculate scaled offset
                var customCenterTransformed = CGPoint.zero
                if let customCenterRaw = videoModel.specificCenter {
                    customCenterTransformed = CGPoint(x: customCenterRaw.x * scaleX - scaledSize.width / 2, y: customCenterRaw.y * scaleY - scaledSize.height / 2)
                }

                // Rotate transform around center if media node is rotated (used in the FF1 templates for example).
                if mediaNode.transform.rotation != 0 {
                    transform = transform.translatedBy(x: videoTrackSize.width/2, y: videoTrackSize.height/2)
                    transform = transform.rotated(by: mediaNode.transform.rotation)
                    transform = transform.translatedBy(x: -videoTrackSize.width/2, y: -videoTrackSize.height/2)
                }
                if let videoTrack = videoTrack {
                    let rotation = self.rotation(with: videoTrack.preferredTransform)
                    if rotation != 0 {
                        transform = transform.rotated(by: rotation)
                    }
                }

                // Apply custom center offset. We need to do this after rotation because otherwise rotation would be around a different point, not around center. See - https://stackru.com/questions/8275882/one-step-affine-transform-for-rotation-around-a-point
                if customCenterTransformed != .zero {
                    transform = transform.translatedBy(x: customCenterTransformed.x, y: customCenterTransformed.y)
                }

                // Apply scale
                transform = transform.scaledBy(x: aspectFillScale, y: aspectFillScale)

                // Calculating the crop frame for this media node (video should not be visible outside of the media node bounds). It is calculated relative to the video transform because this is how it is applied by AVFoundation
                let cropFrame = CGRect(x: (distanceToCenter.x - customCenterTransformed.x) / aspectFillScale, y: (distanceToCenter.y - customCenterTransformed.y) / aspectFillScale, width: scaledSize.width / aspectFillScale, height: scaledSize.height / aspectFillScale)

                // Generate a layer instruction for background video for this media node
                let layerBackgroundInstruction = backgroundLayerInstruction(mediaView: mediaNode, scaleX: scaleX, scaleY: scaleY, mixComposition: mixComposition, url: backgroundURL)

                // Generate a layer instruction for the video itself
                let layerInstruction = self.layerInstruction(for: videoAsset, mixComposition: mixComposition, transform: transform, cropFrame: cropFrame, trackId: Int32(id), isMuted: videoModel.isMuted, isLoop: videoModel.loopVideo)

                // If video is not looped we hide it after it finishes (hack)
                if videoModel.loopVideo {
                    maxVideoDuration = Constants.Video.videoLengthMaxInSeconds
                } else {
                    let videoDuration = videoTrack?.timeRange.duration.seconds ?? 0
                    maxVideoDuration = max(maxVideoDuration, videoDuration)
                    let removeFromVideo = transform.scaledBy(x: 0, y: 0)
                    layerInstruction.setTransform(removeFromVideo, at: CMTime(seconds: videoDuration, preferredTimescale: CMTimeScale.init(exactly: Constants.Video.videoTimeScale)!))
                }
                layerInstructions.append(layerBackgroundInstruction)
                layerInstructions.append(layerInstruction)
            }
        }

        // Sorting videos depending on their id. Videos with a bigger id will be displayed on top of videos with a lower id (probably a hack too)
        layerInstructions.sort { $0.trackID > $1.trackID }

        // Set up the video instruction
        let mainInstruction = AVMutableVideoCompositionInstruction()
        let duration = CMTime(seconds: maxVideoDuration, preferredTimescale: CMTimeScale.init(exactly: Constants.Video.videoTimeScale)!)
        mainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, duration)
        mainInstruction.layerInstructions = layerInstructions

        let mainCompositionInst = AVMutableVideoComposition()
        mainCompositionInst.renderSize = renderSize
        mainCompositionInst.instructions = [mainInstruction]
        mainCompositionInst.frameDuration = CMTimeMake(1, 30)
        return (mainCompositionInst, mixComposition)
    }

    private func exportVideoSession(_ asset: AVAsset, videoComposition: AVMutableVideoComposition) -> Promise<URL> {
        let promise = Promise<URL>(on: .main) { fulfill, reject in
            let uuid = UUID().uuidString
            let url = self.temproraryFilesFolder.appendingPathComponent("\(uuid).mov")
            let exporter = AVAssetExportSession(asset: asset, presetName: AVAssetExportPresetHighestQuality)
            exporter?.outputURL = url
            exporter?.outputFileType = AVFileType.mov
            exporter?.videoComposition = videoComposition
            exporter?.exportAsynchronously(completionHandler: {
                if let error = exporter?.error {
                    reject(error)
                } else {
                    fulfill(url)
                }
            })
        }
        return promise
    }

    /**
     Creates layer instruction for a video asset
     - Parameter videoAsset: video asset instance
     - Parameter mixComposition: composition instance
     - Parameter transform: affine transform for layer instruction
     - Parameter cropFrame: crop rectangle
     - Parameter trackId: composition track id
     - Parameter isMuted: mute video or not
     - Parameter isLoop: repeat the video up to the maximum video length or not
     */
    private func layerInstruction(for videoAsset: AVAsset, mixComposition: AVMutableComposition, transform: CGAffineTransform, cropFrame: CGRect, trackId: Int32, isMuted: Bool, isLoop: Bool) -> AVMutableVideoCompositionLayerInstruction {
        // Getting video and audio tracks from AVAsset
        let assetTrack = videoAsset.tracks(withMediaType: AVMediaType.video)[0]
        var assetAudioTrack: AVAssetTrack?
        if !isMuted {
            assetAudioTrack = videoAsset.tracks(withMediaType: AVMediaType.audio).first
        }
        let exportVideoTrack = mixComposition.addMutableTrack(withMediaType: AVMediaType.video, preferredTrackID: trackId)
        var exportAudioTrack: AVMutableCompositionTrack?
        if assetAudioTrack != nil {
            exportAudioTrack = mixComposition.addMutableTrack(withMediaType: AVMediaType.audio, preferredTrackID: trackId)
        }
        var startSegmentTime = kCMTimeZero
        var segmentDuration = assetTrack.timeRange.duration
        let timeScale = assetTrack.naturalTimeScale
        let duration = CMTime(seconds: isLoop ? Constants.Video.videoLengthMaxInSeconds : assetTrack.timeRange.duration.seconds, preferredTimescale: timeScale)
        segmentDuration = CMTimeMinimum(segmentDuration, duration)

        // Repeating the video if its duration is less than the max video duration
        repeat {
            if CMTimeAdd(startSegmentTime, segmentDuration).value > duration.value {
                segmentDuration = CMTimeSubtract(duration, startSegmentTime)
            }
            do {
                try exportVideoTrack?.insertTimeRange(CMTimeRangeMake(kCMTimeZero, segmentDuration), of: assetTrack, at: startSegmentTime)
                if let assetAudioTrack = assetAudioTrack {
                    try exportAudioTrack?.insertTimeRange(CMTimeRangeMake(kCMTimeZero, segmentDuration), of: assetAudioTrack, at: startSegmentTime)
                }
            } catch let error {
                print(error)
            }
            startSegmentTime = CMTimeAdd(startSegmentTime, segmentDuration)
        } while (startSegmentTime.value < duration.value)

        let videolayerInstruction = AVMutableVideoCompositionLayerInstruction(assetTrack: exportVideoTrack!)

        // Some videos seem to be mirrored; this is a hack for that kind of video. Probably we should rework this
        // if preferredTransform.c == -1 && preferredTransform.tx == 0 && preferredTransform.ty == 0 {
        //     preferredTransform = preferredTransform.scaledBy(x: 1, y: -1)
        //     fatalError("Some videos seems to be mirrored, hack for this kind of video. Probablt we should rework this")
        // }
        debugPrint("setTransform", "coordinate", transform.coordinate(), "cropFrame", cropFrame)
        videolayerInstruction.setTransform(transform, at: kCMTimeZero)
        videolayerInstruction.setCropRectangle(cropFrame, at: kCMTimeZero)
        return videolayerInstruction
    }
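
To make the looping step in `layerInstruction` easier to reason about, here is a minimal sketch of the same segment arithmetic without AVFoundation. It uses plain integer ticks, and it assumes that every time value shares one timescale, which is also what comparing `.value` fields directly relies on. `Segment` and `loopSegments` are hypothetical names introduced only for this illustration.

```swift
import Foundation

/// One inserted piece of the source clip, in ticks of a shared timescale.
struct Segment: Equatable {
    let start: Int64
    let duration: Int64
}

/// Splits `total` ticks into back-to-back copies of a clip lasting `clip` ticks,
/// trimming the last copy so the sum never exceeds `total`.
func loopSegments(clip: Int64, total: Int64) -> [Segment] {
    guard clip > 0, total > 0 else { return [] }
    var segments: [Segment] = []
    var start: Int64 = 0
    while start < total {
        let duration = min(clip, total - start)
        segments.append(Segment(start: start, duration: duration))
        start += duration
    }
    return segments
}

// Assumed example: a 4-second clip looped to fill 10 seconds at 600 ticks per second.
let segments = loopSegments(clip: 2400, total: 6000)
for segment in segments {
    print(segment.start, segment.duration)
}
```

With these assumed numbers the clip is inserted twice in full and once trimmed to 2 seconds, so the track ends exactly at the requested duration.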

    private func rotation(with t: CGAffineTransform) -> CGFloat {
        // return atan2f(Float(CGFloat(t.b)), CGFloat(t.a))
        let b = Float(t.b)
        let a = Float(t.a)
        let res = atan2f(b, a)
        return CGFloat(res)
    }
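
As a quick check of what `rotation(with:)` returns, the sketch below applies the same `atan2(b, a)` formula to raw matrix entries. `rotationAngle` is a hypothetical stand-in that takes the entries as `Double` values instead of a `CGAffineTransform`, and the portrait matrix is an assumed example of what a camera recording often carries in `preferredTransform`.

```swift
import Foundation

/// Rotation angle in radians encoded by the `a` and `b` entries of an affine matrix.
func rotationAngle(a: Double, b: Double) -> Double {
    return atan2(b, a)
}

// Assumed matrix entries for a clip recorded in portrait: a = 0, b = 1, c = -1, d = 0.
let portrait = rotationAngle(a: 0, b: 1)
// Identity matrix: a = 1, b = 0.
let landscape = rotationAngle(a: 1, b: 0)

print(portrait * 180 / Double.pi)  // approximately 90 degrees
print(landscape * 180 / Double.pi) // 0 degrees
```

Note that this formula recovers only the angle. The translation part of the matrix (`tx`, `ty`) is not included in the value it returns.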

    /**
     Adds overlay image on top of a video layer
     - Parameter composition: video composition to add overlay to
     - Parameter size: result video size
     - Parameter overlayImage: overlay image instance
     */
    private func addOverlay(to composition: AVMutableVideoComposition, size: CGSize, overlayImage: UIImage) {
        let overlayLayer = CALayer()
        overlayLayer.frame = CGRect(x: 0, y: 0, width: size.width, height: size.height)
        overlayLayer.contents = overlayImage.cgImage
        overlayLayer.opacity = 1
        let parentLayer = CALayer()
        let videoLayer = CALayer()
        parentLayer.frame = CGRect(x: 0, y: 0, width: size.width, height: size.height)
        videoLayer.frame = CGRect(x: 0, y: 0, width: size.width, height: size.height)
        parentLayer.addSublayer(videoLayer)
        parentLayer.addSublayer(overlayLayer)
        composition.animationTool = AVVideoCompositionCoreAnimationTool(postProcessingAsVideoLayer: videoLayer, in: parentLayer)
    }

    // MARK: - Helpers

    private func aspectFillScale(for size: CGSize, in containerSize: CGSize) -> CGFloat {
        let widthRatio = containerSize.width / size.width
        let heightRatio = containerSize.height / size.height
        let scalingFactor = max(widthRatio, heightRatio)
        return scalingFactor
    }
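
The aspect-fill scale and the crop rectangle derived from it can be verified with concrete numbers. The sketch below repeats the arithmetic for the simplest case, with no user scale and no user offset. `centeredCrop` is a hypothetical helper, and the 1920 x 1080 video in a 400 x 400 pixel node is an assumed example.

```swift
import Foundation

/// Scale that makes `size` cover `container` completely (aspect fill).
func aspectFillScale(for size: CGSize, in container: CGSize) -> CGFloat {
    return max(container.width / size.width, container.height / size.height)
}

/// Crop rectangle in the video's own (unscaled) coordinates, centred, no user offset.
func centeredCrop(videoSize: CGSize, container: CGSize) -> CGRect {
    let scale = aspectFillScale(for: videoSize, in: container)
    let scaled = CGSize(width: videoSize.width * scale, height: videoSize.height * scale)
    // How far the scaled video sticks out past the container on each side.
    let overflow = CGPoint(x: (scaled.width - container.width) / 2,
                           y: (scaled.height - container.height) / 2)
    // Dividing by the scale converts container pixels back to video pixels.
    return CGRect(x: overflow.x / scale, y: overflow.y / scale,
                  width: container.width / scale, height: container.height / scale)
}

// Assumed example: a 1920 x 1080 video inside a 400 x 400 pixel media node.
let crop = centeredCrop(videoSize: CGSize(width: 1920, height: 1080),
                        container: CGSize(width: 400, height: 400))
print(crop) // approximately x 420, y 0, width 1080, height 1080
```

The result is the centred 1080 x 1080 square of the source frame, which is what an aspect-fill crop of a landscape video into a square node should give.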

    private func transformFromRect(from source: CGRect, toRect destination: CGRect) -> CGAffineTransform {
        return CGAffineTransform.identity
            .translatedBy(x: destination.midX - source.midX, y: destination.midY - source.midY)
            .scaledBy(x: destination.width / source.width, y: destination.height / source.height)
    }

    /**
     Video background layer for video
     - Parameter mediaView: template for video part
     - Parameter scaleX: scale for video
     - Parameter scaleY: scale for video
     - Parameter url: path for background file
     - Returns: Background layer under video
     */
    private func backgroundLayerInstruction(mediaView: StoryMediaNode, scaleX: CGFloat, scaleY: CGFloat, mixComposition: AVMutableComposition, url: URL) -> AVMutableVideoCompositionLayerInstruction {
        let videoBackgroundAsset = AVAsset(url: url)
        let videoBackgroundTrack = videoBackgroundAsset.tracks(withMediaType: AVMediaType.video).first
        let videoBackgroundTrackSize = videoBackgroundTrack?.resolution() ?? CGSize.zero
        debugPrint("videoBackgroundTrackSize", videoBackgroundTrackSize)
        let hideBorderValue: CGFloat = 1
        var transformBG = CGAffineTransform(translationX: mediaView.frame.origin.x * scaleX - hideBorderValue, y: mediaView.frame.origin.y * scaleY - hideBorderValue)
        transformBG = transformBG.scaledBy(x: ((mediaView.frame.size.width + hideBorderValue) / videoBackgroundTrackSize.width) * scaleX,
                                           y: ((mediaView.frame.size.height + hideBorderValue) / videoBackgroundTrackSize.height) * scaleY)
        transformBG = transformBG.translatedBy(x: videoBackgroundTrackSize.width/2, y: videoBackgroundTrackSize.height/2)
        transformBG = transformBG.rotated(by: mediaView.transform.rotation)
        transformBG = transformBG.translatedBy(x: -videoBackgroundTrackSize.width/2, y: -videoBackgroundTrackSize.height/2)
        return self.layerInstruction(for: videoBackgroundAsset, mixComposition: mixComposition, transform: transformBG, cropFrame: mediaView.bounds, trackId: 0, isMuted: true, isLoop: false)
    }

Template examples

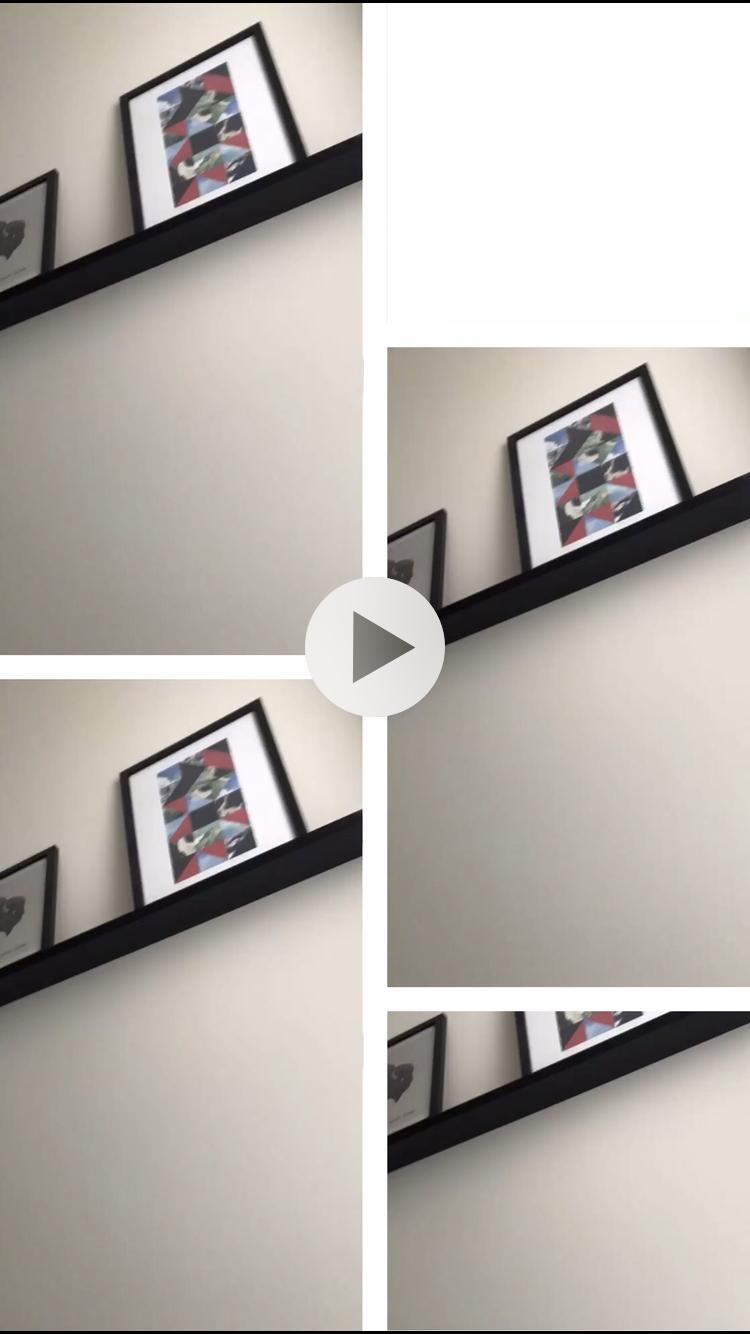

For example, the result should be

But I got this result (video): https://monosnap.com/file/SHP33UvNbJeyGw7rX9i5lTTtmXd5lx
