Combining images in CameraView with an overlay (Swift 3)?

I have almost solved this, thanks to some brilliant help that put me on the right track. This is the code I have now.

Basically, I can now produce one image from the drawn overlay and one from the camera, but I cannot combine them yet. I have found very little useful code that does this in a simple way.

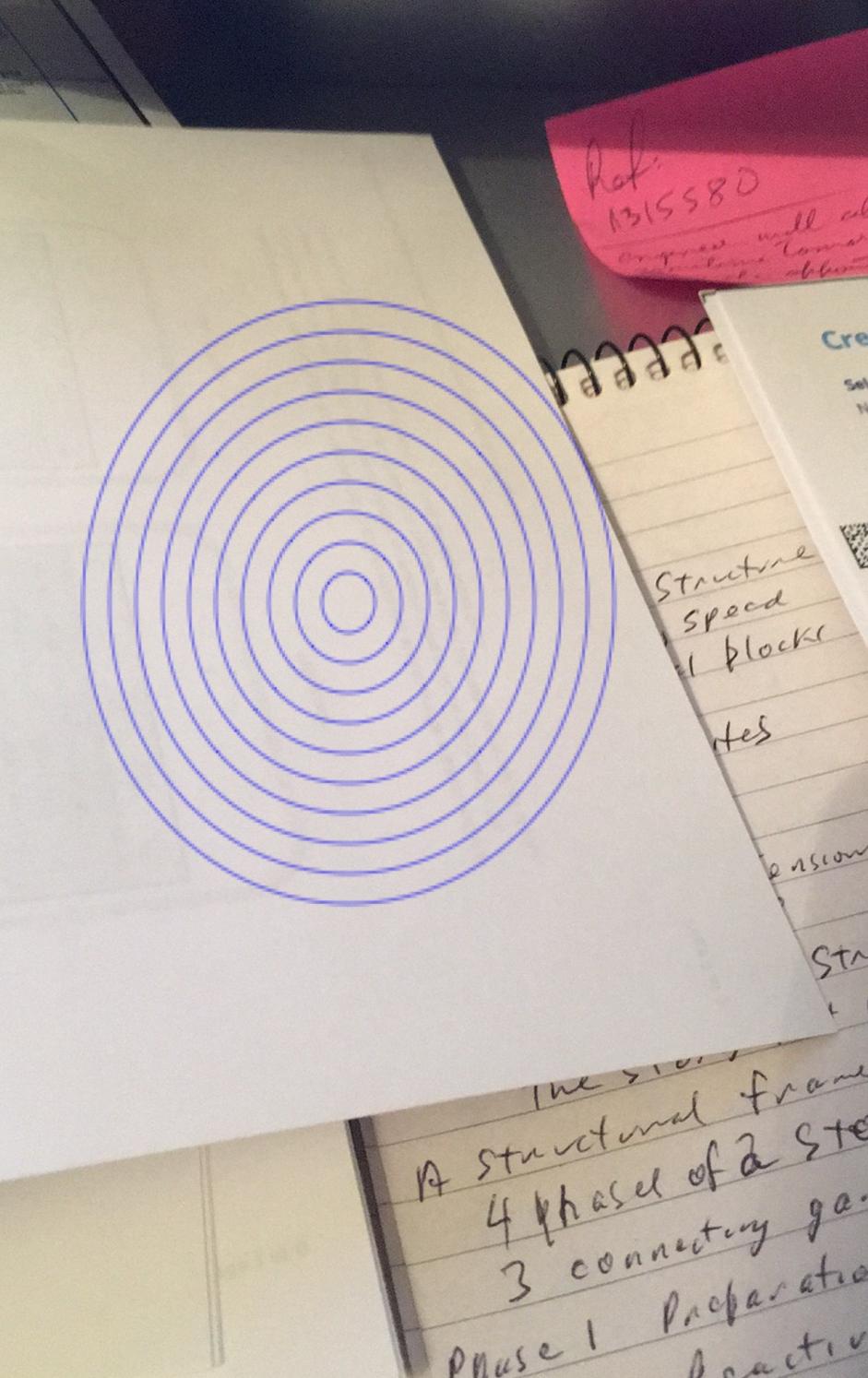

The important parts are the extension block right at the top and the additions to the saveToCamera() function near the bottom of the code. In short, I think I now have the two images I need. The snapshot of myImage appears on a white background, and I am not sure whether that is expected. This is how it looks in the simulator, so it may simply be normal.

Image 2: the saved myImage snapshot described above.

import UIKit

import AVFoundation

import Foundation

// extension must be outside class

extension UIImage {

convenience init(view: UIView) {

UIGraphicsBeginImageContext(view.frame.size)

view.layer.render(in: UIGraphicsGetCurrentContext()!)

let image = UIGraphicsGetImageFromCurrentImageContext()

UIGraphicsEndImageContext()

self.init(cgImage: (image?.cgImage)!)

}

}

class ViewController: UIViewController {

@IBOutlet weak var navigationBar: UINavigationBar!

@IBOutlet weak var imgOverlay: UIImageView!

@IBOutlet weak var btnCapture: UIButton!

@IBOutlet weak var shapeLayer: UIView!

let captureSession = AVCaptureSession()

let stillImageOutput = AVCaptureStillImageOutput()

var previewLayer : AVCaptureVideoPreviewLayer?

//var shapeLayer : CALayer?

// If we find a device we'll store it here for later use

var captureDevice : AVCaptureDevice?

override func viewDidLoad() {

super.viewDidLoad()

// Do any additional setup after loading the view, typically from a nib.

//=======================

let midX = self.view.bounds.midX

let midY = self.view.bounds.midY

for index in 1...10 {

let circlePath = UIBezierPath(arcCenter: CGPoint(x: midX,y: midY), radius: CGFloat((index * 10)), startAngle: CGFloat(0), endAngle:CGFloat(M_PI * 2), clockwise: true)

let shapeLayerPath = CAShapeLayer()

shapeLayerPath.path = circlePath.cgPath

//change the fill color

shapeLayerPath.fillColor = UIColor.clear.cgColor

//you can change the stroke color

shapeLayerPath.strokeColor = UIColor.blue.cgColor

//you can change the line width

shapeLayerPath.lineWidth = 0.5

// add the blue-circle layer to the shapeLayer ImageView

shapeLayer.layer.addSublayer(shapeLayerPath)

}

print("Shape layer drawn")

//=====================

captureSession.sessionPreset = AVCaptureSessionPresetHigh

if let devices = AVCaptureDevice.devices() as? [AVCaptureDevice] {

// Loop through all the capture devices on this phone

for device in devices {

// Make sure this particular device supports video

if (device.hasMediaType(AVMediaTypeVideo)) {

// Finally check the position and confirm we've got the back camera

if(device.position == AVCaptureDevicePosition.back) {

captureDevice = device

if captureDevice != nil {

print("Capture device found")

beginSession()

}

}

}

}

}

}

@IBAction func actionCameraCapture(_ sender: AnyObject) {

print("Camera button pressed")

saveToCamera()

}

func beginSession() {

do {

try captureSession.addInput(AVCaptureDeviceInput(device: captureDevice))

stillImageOutput.outputSettings = [AVVideoCodecKey:AVVideoCodecJPEG]

if captureSession.canAddOutput(stillImageOutput) {

captureSession.addOutput(stillImageOutput)

}

}

catch {

print("error: \(error.localizedDescription)")

}

guard let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession) else {

print("no preview layer")

return

}

// this is what displays the camera view. But - it's on TOP of the drawn view, and under the overview. ??

self.view.layer.addSublayer(previewLayer)

previewLayer.frame = self.view.layer.frame

captureSession.startRunning()

print("Capture session running")

self.view.addSubview(navigationBar)

//self.view.addSubview(imgOverlay)

self.view.addSubview(btnCapture)

// shapeLayer ImageView is already a subview created in IB

// but this will bring it to the front

self.view.addSubview(shapeLayer)

}

func saveToCamera() {

if let videoConnection = stillImageOutput.connection(withMediaType: AVMediaTypeVideo) {

stillImageOutput.captureStillImageAsynchronously(from: videoConnection, completionHandler: { (sampleBuffer, error) in

if let imageData = AVCaptureStillImageOutput.jpegStillImageNSDataRepresentation(sampleBuffer) {

if let cameraImage = UIImage(data: imageData) {

// cameraImage is the camera preview image.

// I need to combine/merge it with the myImage that is actually the blue circles.

// This converts the UIView of the blue circles to an image. Uses 'extension' at top of code.

let myImage = UIImage(view: self.shapeLayer)

print("converting myImage to an image")

UIImageWriteToSavedPhotosAlbum(cameraImage, nil, nil, nil)

}

}

})

}

}

override func didReceiveMemoryWarning() {

super.didReceiveMemoryWarning()

// Dispose of any resources that can be recreated.

}

}

2 Answers

Try this. Instead of compositing your overlay view, it draws the circles directly and merges them into the output:

import UIKit

import AVFoundation

import Foundation

class CameraWithTargetViewController: UIViewController {

@IBOutlet weak var navigationBar: UINavigationBar!

@IBOutlet weak var imgOverlay: UIImageView!

@IBOutlet weak var btnCapture: UIButton!

@IBOutlet weak var shapeLayer: UIView!

let captureSession = AVCaptureSession()

let stillImageOutput = AVCaptureStillImageOutput()

var previewLayer : AVCaptureVideoPreviewLayer?

//var shapeLayer : CALayer?

// If we find a device we'll store it here for later use

var captureDevice : AVCaptureDevice?

override func viewDidLoad() {

super.viewDidLoad()

// Do any additional setup after loading the view, typically from a nib.

//=======================

captureSession.sessionPreset = AVCaptureSessionPresetHigh

if let devices = AVCaptureDevice.devices() as? [AVCaptureDevice] {

// Loop through all the capture devices on this phone

for device in devices {

// Make sure this particular device supports video

if (device.hasMediaType(AVMediaTypeVideo)) {

// Finally check the position and confirm we've got the back camera

if(device.position == AVCaptureDevicePosition.back) {

captureDevice = device

if captureDevice != nil {

print("Capture device found")

beginSession()

}

}

}

}

}

}

@IBAction func actionCameraCapture(_ sender: AnyObject) {

print("Camera button pressed")

saveToCamera()

}

func beginSession() {

do {

try captureSession.addInput(AVCaptureDeviceInput(device: captureDevice))

stillImageOutput.outputSettings = [AVVideoCodecKey:AVVideoCodecJPEG]

if captureSession.canAddOutput(stillImageOutput) {

captureSession.addOutput(stillImageOutput)

}

}

catch {

print("error: \(error.localizedDescription)")

}

guard let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession) else {

print("no preview layer")

return

}

// this is what displays the camera view. But - it's on TOP of the drawn view, and under the overview. ??

self.view.layer.addSublayer(previewLayer)

previewLayer.frame = self.view.layer.frame

imgOverlay.frame = self.view.frame

imgOverlay.image = self.drawCirclesOnImage(fromImage: nil, targetSize: imgOverlay.bounds.size)

self.view.bringSubview(toFront: navigationBar)

self.view.bringSubview(toFront: imgOverlay)

self.view.bringSubview(toFront: btnCapture)

// don't use shapeLayer anymore...

// self.view.bringSubview(toFront: shapeLayer)

captureSession.startRunning()

print("Capture session running")

}

func getImageWithColor(color: UIColor, size: CGSize) -> UIImage {

let rect = CGRect(origin: CGPoint(x: 0, y: 0), size: CGSize(width: size.width, height: size.height))

UIGraphicsBeginImageContextWithOptions(size, false, 0)

color.setFill()

UIRectFill(rect)

let image: UIImage = UIGraphicsGetImageFromCurrentImageContext()!

UIGraphicsEndImageContext()

return image

}

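// targetSize is non-optional so that a nil value can never reach a forced unwrap below

func drawCirclesOnImage(fromImage: UIImage? = nil, targetSize: CGSize = CGSize.zero) -> UIImage? {

if fromImage == nil && targetSize == CGSize.zero {

return nil

}

var tmpimg: UIImage?

if targetSize == CGSize.zero {

// no target size given: draw on top of the supplied image

tmpimg = fromImage

} else {

// target size given: start from a transparent image of that size

tmpimg = getImageWithColor(color: UIColor.clear, size: targetSize)

}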

guard let img = tmpimg else {

return nil

}

let imageSize = img.size

let scale: CGFloat = 0

UIGraphicsBeginImageContextWithOptions(imageSize, false, scale)

img.draw(at: CGPoint.zero)

let w = imageSize.width

let midX = imageSize.width / 2

let midY = imageSize.height / 2

// red circles - radius in %

let circleRads = [ 0.07, 0.13, 0.17, 0.22, 0.29, 0.36, 0.40, 0.48, 0.60, 0.75 ]

// center "dot" - radius is 1.5%

var circlePath = UIBezierPath(arcCenter: CGPoint(x: midX,y: midY), radius: CGFloat(w * 0.015), startAngle: CGFloat(0), endAngle:CGFloat(M_PI * 2), clockwise: true)

UIColor.red.setFill()

circlePath.stroke()

circlePath.fill()

// blue circle is between first and second red circles

circlePath = UIBezierPath(arcCenter: CGPoint(x: midX,y: midY), radius: w * CGFloat((circleRads[0] + circleRads[1]) / 2.0), startAngle: CGFloat(0), endAngle:CGFloat(M_PI * 2), clockwise: true)

UIColor.blue.setStroke()

circlePath.lineWidth = 2.5

circlePath.stroke()

UIColor.red.setStroke()

for pct in circleRads {

let rad = w * CGFloat(pct)

circlePath = UIBezierPath(arcCenter: CGPoint(x: midX, y: midY), radius: CGFloat(rad), startAngle: CGFloat(0), endAngle:CGFloat(M_PI * 2), clockwise: true)

circlePath.lineWidth = 2.5

circlePath.stroke()

}

let newImage = UIGraphicsGetImageFromCurrentImageContext()

UIGraphicsEndImageContext()

return newImage

}

func saveToCamera() {

if let videoConnection = stillImageOutput.connection(withMediaType: AVMediaTypeVideo) {

stillImageOutput.captureStillImageAsynchronously(from: videoConnection, completionHandler: { (sampleBuffer, error) in

if let imageData = AVCaptureStillImageOutput.jpegStillImageNSDataRepresentation(sampleBuffer) {

if let cameraImage = UIImage(data: imageData) {

if let nImage = self.drawCirclesOnImage(fromImage: cameraImage, targetSize: CGSize.zero) {

UIImageWriteToSavedPhotosAlbum(nImage, nil, nil, nil)

}

}

}

})

}

}

override func didReceiveMemoryWarning() {

super.didReceiveMemoryWarning()

// Dispose of any resources that can be recreated.

}

}

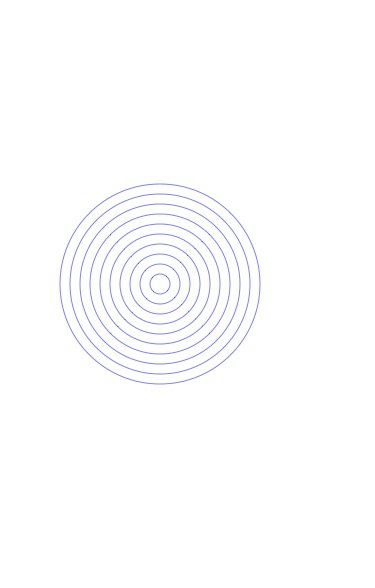
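The circle radii in drawCirclesOnImage are fractions of the image width, which appears to be why the same routine can draw both the on-screen overlay and the full-resolution photo. A minimal sketch of that scaling follows; the helper name `circleRadii(forWidth:fractions:)` is hypothetical and is not part of the code above.

```swift
import Foundation

// Hypothetical helper: converts radius fractions (relative to the image width)
// into absolute radii for an image of the given width.
func circleRadii(forWidth width: CGFloat, fractions: [CGFloat]) -> [CGFloat] {
    // Each radius is simply the fraction multiplied by the width,
    // so the pattern keeps its proportions at any resolution.
    return fractions.map { width * $0 }
}
```

Calling it once with the overlay's width in points and once with the photo's width in pixels yields radii that differ only by the ratio of the two widths, so the circles cover the same proportion of both images.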

OK, I have pretty much solved it. The important code is below. The resulting image is slightly distorted, but I will work on that and fix it, unless someone spots a good fix first.

func saveToCamera() {

if let videoConnection = stillImageOutput.connection(withMediaType: AVMediaTypeVideo) {

stillImageOutput.captureStillImageAsynchronously(from: videoConnection, completionHandler: { (sampleBuffer, error) in

if let imageData = AVCaptureStillImageOutput.jpegStillImageNSDataRepresentation(sampleBuffer) {

if let cameraImage = UIImage(data: imageData) {

// cameraImage is the camera preview image.

// I need to combine/merge it with the myImage that is actually the blue circles.

// This converts the UIView of the blue circles to an image. Uses 'extension' at top of code.

let myImage = UIImage(view: self.shapeLayer)

print("converting myImage to an image")

// composite() returns an optional, so unwrap it safely instead of forcing it

if let newImage = self.composite(image: cameraImage, overlay: myImage, scaleOverlay: true) {

UIImageWriteToSavedPhotosAlbum(newImage, nil, nil, nil)

}

}

}

})

}

}

func composite(image:UIImage, overlay:(UIImage), scaleOverlay: Bool = false)->UIImage?{

UIGraphicsBeginImageContext(image.size)

var rect = CGRect(x: 0, y: 0, width: image.size.width, height: image.size.height)

image.draw(in: rect)

if scaleOverlay == false {

rect = CGRect(x: 0, y: 0, width: overlay.size.width, height: overlay.size.height)

}

overlay.draw(in: rect)

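let result = UIGraphicsGetImageFromCurrentImageContext()

// every UIGraphicsBeginImageContext call needs a matching end call

UIGraphicsEndImageContext()

return result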

}

The resulting saved image.
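The slight distortion mentioned above most likely comes from `composite` stretching the overlay, which has the screen's aspect ratio, to the full size of the camera image, which usually has a different one. One possible fix is to scale the overlay uniformly and centre it. The sketch below assumes the preview layer keeps its default aspect-fit video gravity, in which case the overlay has to cover the photo (aspect fill); with an aspect-fill preview the `max` would become `min`. The function name `aspectFillRect` is hypothetical.

```swift
import Foundation

// Hypothetical helper: returns the rect in which an overlay of `overlaySize`
// should be drawn so that it covers a canvas of `canvasSize` while keeping
// the overlay's own aspect ratio. The rect is centred on the canvas and may
// extend beyond it on one axis.
func aspectFillRect(overlaySize: CGSize, canvasSize: CGSize) -> CGRect {
    // Avoid dividing by zero for an empty overlay.
    guard overlaySize.width > 0, overlaySize.height > 0 else { return .zero }

    // Use the larger of the two scale factors so both axes are fully covered.
    let scale = max(canvasSize.width / overlaySize.width,
                    canvasSize.height / overlaySize.height)
    let width = overlaySize.width * scale
    let height = overlaySize.height * scale

    // Centre the scaled overlay on the canvas.
    return CGRect(x: (canvasSize.width - width) / 2,
                  y: (canvasSize.height - height) / 2,
                  width: width,
                  height: height)
}
```

In `composite`, the rect returned for `overlay.size` and `image.size` could be passed to `overlay.draw(in:)` in place of the full-image rect; anything falling outside the image context is clipped.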